Knative: Serverless for Kubernetes — an overview and launch in Minikube

What Serverless is, plus an overview of Knative, a system for running Serverless workloads in Kubernetes: its components and working with Knative Eventing

Knative is a system that lets you use the Serverless development model in Kubernetes. In essence, Knative can be thought of as another layer of abstraction that spares developers from diving into the details of deployment, scaling, and networking in “vanilla” Kubernetes.

Knative development was started at Google with the participation of companies such as IBM, Pivotal, and Red Hat, and about 50 companies contribute to the project in total.

What is: Serverless computing

But first, let’s consider what Serverless is in general.

So, Serverless (“server” + “less”) is a development model in which you don’t need to worry about server management: all of it is taken care of by the cloud provider that offers you a Serverless computing service.

That is, in a cloud we usually have:

bare-metal servers somewhere in the data center

on which virtual machines are run

on which we run containers

Serverless computing adds one more layer on top of these, where you can run your function, i.e. a minimal deployable unit, without dealing with bare metal, virtual machines, or containers. You just have code that can be launched in the cloud in a couple of clicks, and the cloud provider takes over all the infrastructure management tasks. You don’t have to worry about high availability, fault tolerance, backups, security patches, monitoring, or logging at the infrastructure level. Even at the network level, you don’t need to think about load balancing or how to distribute the load on your service: you just accept requests on an API Gateway or configure a trigger on an event that will invoke your function. In other words, the cloud provider offers you Function-as-a-Service, or FaaS.

All this is great when a project is just starting and developers lack the experience and/or time to spin up servers and clusters, so Time-to-Market is reduced. It also helps when a project is still testing its operating model and needs to implement its architecture quickly and painlessly.

“Launch, and enjoy!”

In addition, FaaS has a different payment model: instead of paying for running servers regardless of whether they are doing any work or just sitting idle, with FaaS you pay only for the time your function is actually running, i.e. Pay-for-Use Services.

So you:

create a function

configure the events that will trigger this function (you still don’t pay anything)

when an event occurs, it launches the function (from this point on, you pay for as long as it runs)

after the function is finished, it “collapses” and billing for it stops until the next run
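To make the difference between the two billing models concrete, here is a back-of-the-envelope calculation in Python. Every price and workload number in it is a hypothetical placeholder rather than any provider’s actual rate, and real FaaS pricing usually also depends on allocated memory and adds a per-request fee.

```python
# Compare an always-on server with pay-per-use (FaaS) billing.
# All prices are HYPOTHETICAL placeholders, not any provider's real rates.

HOURS_PER_MONTH = 30 * 24  # a 30-day month


def always_on_cost(price_per_hour: float) -> float:
    """A running server is billed for every hour, busy or idle."""
    return price_per_hour * HOURS_PER_MONTH


def pay_per_use_cost(invocations: int, seconds_per_call: float,
                     price_per_second: float) -> float:
    """A function is billed only for the time it actually executes."""
    return invocations * seconds_per_call * price_per_second


if __name__ == "__main__":
    server = always_on_cost(price_per_hour=0.05)
    function = pay_per_use_cost(invocations=100_000, seconds_per_call=0.2,
                                price_per_second=0.0001)
    print(f"always-on:   ${server:.2f}/month")    # $36.00
    print(f"pay-per-use: ${function:.2f}/month")  # $2.00
```

With these made-up numbers, a function called 100,000 times a month for 200 ms each costs a fraction of an always-on server; under a steady, heavy load the comparison can flip.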

FaaS providers include Amazon Web Services with its AWS Lambda, Microsoft Azure and Azure Functions, Google Cloud and Cloud Functions, and IBM Cloud with its IBM Cloud Functions.

Learn more about Serverless in the CNCF Serverless Whitepaper.

Serverless use cases

The Serverless model is ideal for solutions that can work asynchronously and don’t need to keep state, i.e. Stateless systems.

For example, it can be an AWS Lambda function that automatically copies tags to the EBS volumes of a newly created EC2 instance, as in the post AWS: Lambda — copy EC2 tags to its EBS, part 2 — create a Lambda function. There, CloudWatch generates an event when an EC2 instance is created; the event triggers the function and passes it, as an argument, the ID of the EC2 instance whose disks need tags. The function starts on this trigger, adds the tags, and stops until the next call.
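The core logic of such a function can be sketched in Python. This is not the code from that post but a minimal, hypothetical version: it reads the instance ID from an EC2 state-change event (the `detail["instance-id"]` field of the “EC2 Instance State-change Notification” event) and decides which tags to copy to which volumes. The AWS API calls themselves, such as describing the instance and calling EC2 `CreateTags` (`create_tags` in boto3), are left out so the logic runs anywhere; `plan_tagging` and the sample IDs are made up for illustration.

```python
from __future__ import annotations


def extract_instance_id(event: dict) -> str:
    """EC2 state-change events carry the instance ID in event["detail"]["instance-id"]."""
    return event["detail"]["instance-id"]


def plan_tagging(event: dict, instance_tags: list[dict],
                 volume_ids: list[str]) -> dict:
    """Decide which volumes to tag and with which tags (no AWS calls are made).

    In a real function, instance_tags and volume_ids would come from the EC2 API;
    the result is shaped like the Resources/Tags arguments of EC2 CreateTags.
    """
    tags = [
        tag for tag in instance_tags
        # Keys with the reserved "aws:" prefix cannot be set by users, so skip them.
        if not tag["Key"].lower().startswith("aws:")
    ]
    return {
        "InstanceId": extract_instance_id(event),
        "Resources": list(volume_ids),
        "Tags": tags,
    }


if __name__ == "__main__":
    event = {"detail": {"instance-id": "i-0123456789abcdef0", "state": "running"}}
    plan = plan_tagging(
        event,
        instance_tags=[{"Key": "Name", "Value": "web-1"},
                       {"Key": "aws:cloudformation:stack-name", "Value": "demo"}],
        volume_ids=["vol-0aaa1111bbbb2222c"],
    )
    print(plan)
```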

Self-hosted serverless

Using the Serverless model doesn’t require being tied to a FaaS provider: instead, you can run a self-hosted service, for example in your Kubernetes cluster, and thus avoid vendor lock-in.

Such solutions include:

Kubeless, Iron Functions, Space Cloud, OpenLambda, Funktion, OpenWhisk, and Fn Project: currently more dead than alive, although some still get updates

Fission: still has releases, i.e. is being developed, but has only a 2% share

OpenFaaS: used in 10% of cases

Knative: currently the most popular (27%) and most actively developing project

Knative vs AWS Lambda

But why bother running your own Serverless at all?

you can bypass the limits set by the provider (see AWS Lambda quotas)

you avoid vendor lock-in, i.e. you can quickly move to another provider or use a multi-cloud architecture for greater reliability

you have more options for monitoring, tracing, and working with logs, because in Kubernetes you can set up whatever tooling you need, unlike AWS Lambda with its tie to CloudWatch

By the way, Knative can run functions developed for AWS Lambda thanks to TriggerMesh’s Knative Lambda Runtimes (KLR).

Knative components and architecture

Knative has two main components — Knative Serving and Knative Eventing.

Knative uses Istio for networking, routing, and audit control (although another networking layer can be used instead, see Configuring the ingress gateway).

Knative Serving

Knative Serving is responsible for container deployment, updates, networking, and autoscaling.

The work of Knative Serving can be represented as follows:

Traffic from a client comes to the Ingress and, depending on the request, is sent to a specific Knative Service, which consists of a Kubernetes Service and a Kubernetes Deployment.

Depending on the configuration of a specific Knative Service, traffic can be split between different revisions, i.e. versions of the application itself.

KPA (Knative Pod Autoscaler) tracks the number of requests and adds new pods to the Deployment when needed. If there is no traffic, KPA scales the pods down to zero, and when new client requests arrive, it starts pods and scales them according to the number of requests to be processed.

The main resource types:

Service:

service.serving.knative.dev: responsible for the entire lifecycle of your workload (i.e. the deployment and related resources); controls the creation of dependent resources such as routes, configurations, and revisions

Routes:

route.serving.knative.dev: responsible for mapping a network endpoint to one or more revisions

Configurations:

configuration.serving.knative.dev: responsible for the desired state of the deployment; creates the revisions that traffic will be routed to

Revisions:

revision.serving.knative.dev: a point-in-time snapshot of the code and configuration for each change made to the workload

See Resource Types.
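The relationships between these resources can be pictured with a toy Python model. It is a simplification written for this article, not Knative’s controller code, and the class names are hypothetical: a Service stamps out a new, immutable Revision for every spec change, names it `<service>-NNNNN` (the same pattern as `hello-world-00001`, `hello-00002`, and so on later in this post), and its Route sends all traffic to the latest Revision unless told otherwise.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Revision:
    """Immutable point-in-time snapshot of the code (image) and configuration (env)."""
    name: str
    image: str
    env: tuple[tuple[str, str], ...]


@dataclass
class Service:
    """Toy model: a Service's Configuration stamps out Revisions; a Route splits traffic."""
    name: str
    revisions: list[Revision] = field(default_factory=list)
    route: dict[str, int] = field(default_factory=dict)  # revision name -> percent

    def update(self, image: str, env: dict[str, str]) -> Revision:
        """Every spec change creates a new Revision; by default the Route
        then sends 100% of traffic to the latest one."""
        revision = Revision(
            name=f"{self.name}-{len(self.revisions) + 1:05d}",  # e.g. hello-00001
            image=image,
            env=tuple(sorted(env.items())),
        )
        self.revisions.append(revision)
        self.route = {revision.name: 100}
        return revision


if __name__ == "__main__":
    svc = Service("hello")
    svc.update("gcr.io/knative-samples/helloworld-go", {"TARGET": "World"})
    svc.update("gcr.io/knative-samples/helloworld-go", {"TARGET": "Knative"})
    print([r.name for r in svc.revisions])  # ['hello-00001', 'hello-00002']
    print(svc.route)                        # {'hello-00002': 100}
```

Making `Revision` frozen mirrors the point that a revision is a snapshot: changing the configuration produces a new object instead of mutating the old one.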

Knative Eventing

Knative Eventing is responsible for asynchronous communication between the distributed parts of your system. Instead of depending on each other directly, they can create events (acting as event producers) that are received by other components of the system (event consumers or subscribers, called sinks in Knative terminology). That is, it implements an event-driven architecture.

Knative Eventing uses standard HTTP POST requests to send and receive such events, which must conform to the CloudEvents specification.
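As a sketch of what such a request looks like, the Python snippet below builds (but does not send) an HTTP POST in the CloudEvents “binary” content mode, where event attributes travel as `ce-*` headers and the payload is the request body. The header names match those in the PingSource logs at the end of this post; the helper name, target URL, source, and type values are hypothetical, and in practice you would more likely use a CloudEvents SDK.

```python
import json
import uuid
from datetime import datetime, timezone
from urllib.request import Request


def build_cloudevent_request(url: str, source: str, event_type: str,
                             data: dict) -> Request:
    """Build (without sending) an HTTP POST carrying a CloudEvent in binary mode.

    The required attributes id, source, specversion, and type (plus the optional
    time) travel as ce-* headers; the event data is the JSON request body.
    """
    headers = {
        "ce-id": str(uuid.uuid4()),
        "ce-source": source,
        "ce-specversion": "1.0",
        "ce-type": event_type,
        "ce-time": datetime.now(timezone.utc).isoformat(),
        "Content-Type": "application/json",
    }
    body = json.dumps(data).encode("utf-8")
    return Request(url, data=body, headers=headers, method="POST")


if __name__ == "__main__":
    request = build_cloudevent_request(
        url="http://knative-hello.default.svc.cluster.local",  # hypothetical sink URL
        source="/example/producer",                             # hypothetical source
        event_type="com.example.hello",                         # hypothetical type
        data={"message": "Hello from KBE!"},
    )
    print(request.get_method(), request.full_url)
    for name, value in request.header_items():
        print(f"{name}: {value}")
    # To actually send it: urllib.request.urlopen(request)
```

Note that urllib normalizes header names to `Ce-id` and so on; HTTP header names are case-insensitive, so a receiver treats them the same. The 30-byte JSON body here has the same length as the `content-length=30` seen in the PingSource logs.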

Knative Eventing allows you to create:

Source to Sink: a Source (event producer) sends an event to a Sink, which processes it. The Source role can be played by PingSource, APIServerSource (Kubernetes API events), Apache Kafka, GitHub, etc.

Channel and Subscription: an event pipe, where an event that reaches a Channel is immediately sent on to its subscribers

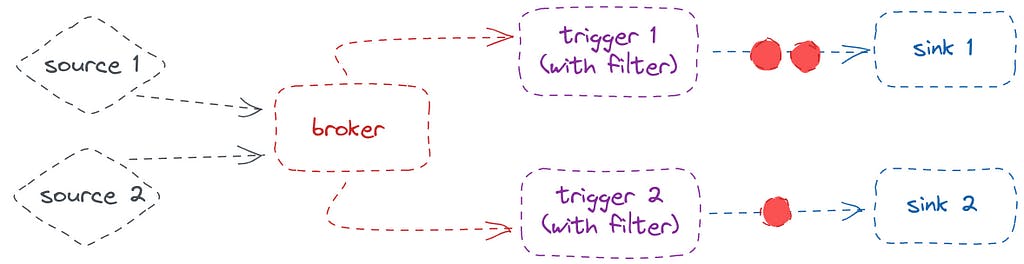

Broker and Trigger: an event mesh, where events reach a Broker that has one or more Triggers; each Trigger has filters that determine which events it receives from the Broker

Install Knative in Minikube

Knative components can be deployed to Kubernetes using YAML manifests (see Install Knative Serving by using YAML and Install Knative Eventing by using YAML), the Knative Operator, or the Knative Quickstart plugin for the Knative CLI.

For now, the quickstart plugin is enough for us, so let’s use it to launch Knative in Minikube.

Install Knative CLI

See Installing kn.

On Arch-based distributions, install it with pacman from the repository:

$ sudo pacman -S knative-client

Check it:

$ kn version

Version:

Build Date:

Git Revision:

Supported APIs:

* Serving

- serving.knative.dev/v1 (knative-serving v0.34.0)

* Eventing

- sources.knative.dev/v1 (knative-eventing v0.34.1)

- eventing.knative.dev/v1 (knative-eventing v0.34.1)

Running Knative with the Quickstart plugin

See kn-plugin-quickstart.

Find the latest version on the releases page and pick the build for your platform (in my case, kn-quickstart-linux-amd64), then download it to a directory that is in your $PATH:

$ wget -O /usr/local/bin/kn-quickstart https://github.com/knative-sandbox/kn-plugin-quickstart/releases/download/knative-v1.8.2/kn-quickstart-linux-amd64

$ chmod +x /usr/local/bin/kn-quickstart

Check that the CLI finds the plugin (the CLI even has autocompletion):

$ kn plugin list

- kn-quickstart : /usr/local/bin/kn-quickstart

Check the plugin:

$ kn quickstart --help

Get up and running with a local Knative environment

Usage:

kn-quickstart [command]

…

The Quickstart plugin will create a Minikube cluster and install Knative Serving, using Kourier instead of Istio as the networking layer and sslip.io for DNS, as well as Knative Eventing.
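The URLs that Knative prints later in this post, such as http://hello-world.default.10.106.17.160.sslip.io, follow a `<service>.<namespace>.<domain>` pattern, and in this setup the domain is an IP address plus sslip.io, a public DNS service that resolves such names to the embedded IP. The Python sketch below, with hypothetical helper names, only illustrates that naming scheme; it does not talk to Knative or perform any DNS lookup.

```python
import ipaddress


def service_url(name: str, namespace: str, ingress_ip: str) -> str:
    """Build a URL following the <name>.<namespace>.<ip>.sslip.io pattern."""
    ipaddress.IPv4Address(ingress_ip)  # raises ValueError for an invalid IPv4 address
    return f"http://{name}.{namespace}.{ingress_ip}.sslip.io"


def sslip_ip(hostname: str) -> str:
    """Return the IPv4 address embedded in a dotted sslip.io hostname.

    Handles only the dotted form seen in this post, e.g.
    "hello.default.10.106.17.160.sslip.io"; no DNS lookup is performed.
    """
    labels = hostname.lower().rstrip(".").split(".")
    if len(labels) < 6 or labels[-2:] != ["sslip", "io"]:
        raise ValueError(f"not a dotted sslip.io hostname: {hostname}")
    return str(ipaddress.IPv4Address(".".join(labels[-6:-2])))


if __name__ == "__main__":
    url = service_url("hello-world", "default", "10.106.17.160")
    print(url)  # http://hello-world.default.10.106.17.160.sslip.io
    print(sslip_ip("hello-world.default.10.106.17.160.sslip.io"))  # 10.106.17.160
```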

Let’s start:

$ kn quickstart minikube

Running Knative Quickstart using Minikube

Minikube version is: v1.29.0

…

🏄 Done! kubectl is now configured to use “knative” cluster and “default” namespace by default

To finish setting up networking for minikube, run the following command in a separate terminal window:

minikube tunnel --profile knative

The tunnel command must be running in a terminal window any time when using the knative quickstart environment.

Press the Enter key to continue

…

In another terminal, create a minikube tunnel for access from the host machine:

$ minikube tunnel --profile knative &

Go back to the previous window:

…

🍿 Installing Knative Serving v1.8.5 …

…

🕸 Installing Kourier networking layer v1.8.3 …

…

🕸 Configuring Kourier for Minikube…

…

🔥 Installing Knative Eventing v1.8.8 …

…

🚀 Knative install took: 3m23s

🎉 Now have some fun with Serverless and Event Driven Apps!

Check Minikube clusters:

$ minikube profile list

|---------|-----------|---------|--------------|------|---------|---------|-------|--------|
| Profile | VM Driver | Runtime | IP           | Port | Version | Status  | Nodes | Active |
|---------|-----------|---------|--------------|------|---------|---------|-------|--------|
| knative | docker    | docker  | 192.168.49.2 | 8443 | v1.24.3 | Running |     1 |        |
|---------|-----------|---------|--------------|------|---------|---------|-------|--------|

Namespaces in Kubernetes:

$ kubectl get ns

NAME STATUS AGE

default Active 7m30s

knative-eventing Active 5m54s

knative-serving Active 6m43s

kourier-system Active 6m23s

…

Pods in the kourier-system namespace:

$ kubectl -n kourier-system get pod

NAME READY STATUS RESTARTS AGE

3scale-kourier-gateway-7b89ff5c79-hwmpr 1/1 Running 0 7m26s

And in the knative-eventing:

$ kubectl -n knative-eventing get pod

NAME READY STATUS RESTARTS AGE

eventing-controller-5c94cfc645-8pxgk 1/1 Running 0 7m10s

eventing-webhook-5554dc76bc-656p4 1/1 Running 0 7m10s

imc-controller-86dbd688db-px5z4 1/1 Running 0 6m39s

imc-dispatcher-5bfbdfcd85-wvpc7 1/1 Running 0 6m39s

mt-broker-controller-78dcfd9768-ttn7t 1/1 Running 0 6m28s

mt-broker-filter-687c575bd4-rpzk7 1/1 Running 0 6m28s

mt-broker-ingress-59d566b54-8t4x7 1/1 Running 0 6m28s

And knative-serving:

$ kubectl -n knative-serving get pod

NAME READY STATUS RESTARTS AGE

activator-66b65c899d-5kqsm 1/1 Running 0 8m17s

autoscaler-54cb7cd8c6-xltb9 1/1 Running 0 8m17s

controller-8686fd49f-976dk 1/1 Running 0 8m17s

default-domain-mk8tf 0/1 Completed 0 7m34s

domain-mapping-559c8cdcbb-fs7q4 1/1 Running 0 8m17s

domainmapping-webhook-cbfc99f99-kqptw 1/1 Running 0 8m17s

net-kourier-controller-54f9c959c6-xsgp8 1/1 Running 0 7m57s

webhook-5c5c86fb8b-l24sh 1/1 Running 0 8m17s

Now let’s see what Knative can do.

Knative Functions

Knative Functions allows you to quickly develop functions without having to write a Dockerfile or even have deep knowledge of Knative and Kubernetes.

Add one more plugin — kn-func:

$ wget -O /usr/local/bin/kn-func https://github.com/knative/func/releases/download/knative-v1.9.3/func_linux_amd64

$ chmod +x /usr/local/bin/kn-func

Check that the CLI finds the plugin:

$ kn plugin list

- kn-func : /usr/local/bin/kn-func

- kn-quickstart : /usr/local/bin/kn-quickstart

Log in to your Docker registry (if you skip this now, Knative will ask you to log in during deployment):

$ docker login

func create

Let’s use func create to create a Python function (see Creating a function):

$ kn func create -l python hello-world

Created python function in /home/setevoy/Scripts/Knative/hello-world

A directory with its code will be created on the working machine:

$ ll hello-world/

total 28

-rw-r--r-- 1 setevoy setevoy 28 Apr 5 12:36 Procfile

-rw-r--r-- 1 setevoy setevoy 862 Apr 5 12:36 README.md

-rwxr-xr-x 1 setevoy setevoy 55 Apr 5 12:36 app.sh

-rw-r--r-- 1 setevoy setevoy 1763 Apr 5 12:36 func.py

-rw-r--r-- 1 setevoy setevoy 390 Apr 5 12:36 func.yaml

-rw-r--r-- 1 setevoy setevoy 28 Apr 5 12:36 requirements.txt

-rw-r--r-- 1 setevoy setevoy 258 Apr 5 12:36 test_func.py

The func.yaml file describes the function’s configuration:

$ cat hello-world/func.yaml

specVersion: 0.35.0
name: hello-world
runtime: python
registry: ""
image: ""
imageDigest: ""
created: 2023-04-05T12:36:46.022291382+03:00
build:
  buildpacks: []
  builder: ""
  buildEnvs: []
run:
  volumes: []
  envs: []
deploy:
  namespace: ""
  remote: false
  annotations: {}
  options: {}
  labels: []
  healthEndpoints:
    liveness: /health/liveness
    readiness: /health/readiness

And func.py holds the code itself, which will be executed:

$ cat hello-world/func.py

from parliament import Context

from flask import Request

import json

#parse request body, json data or URL query parameters

def payload_print(req: Request) -> str:

…

func run

func run lets you test how a function will work without deploying it to Knative. To do this, the Knative CLI builds an image using your container runtime and runs it. See Running a function.

Start by specifying the path to the code:

$ kn func run --path hello-world/

🙌 Function image built: docker.io/setevoy/hello-world:latest

Function already built. Use --build to force a rebuild.

Function started on port 8080

Check Docker containers:

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

3869fa00ac51 setevoy/hello-world:latest “/cnb/process/web” 52 seconds ago Up 50 seconds 127.0.0.1:8080->8080/tcp sweet_vaughan

And try with curl:

$ curl localhost:8080

{}

In the func run output:

Received request

GET http://localhost:8080/ localhost:8080

Host: localhost:8080

User-Agent: curl/8.0.1

Accept: */*

URL Query String:

{}

Or you can check with func invoke:

$ kn func invoke --path hello-world/

Received response

{"message": "Hello World"}

And the function’s output:

$ Received request

POST http://localhost:8080/ localhost:8080

Host: localhost:8080

User-Agent: Go-http-client/1.1

Content-Length: 25

Content-Type: application/json

Accept-Encoding: gzip

Request body:

{"message": "Hello World"}
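The same request can be reproduced with Python’s standard library, for example to call the function from a script. This is a minimal sketch, assuming the function started by `kn func run` is listening on `localhost:8080`; it sends an equivalent request and does not describe how `kn func invoke` works internally. The helper names are hypothetical. Serializing the payload without spaces yields 25 bytes, the same as the `Content-Length: 25` in the log above.

```python
import json
from urllib.request import Request, urlopen


def build_invoke_request(url: str = "http://localhost:8080/") -> Request:
    """Build a JSON POST with the same payload that func invoke sent above."""
    # Compact separators (no spaces) give 25 bytes for this payload.
    body = json.dumps({"message": "Hello World"}, separators=(",", ":")).encode("utf-8")
    return Request(url, data=body, method="POST",
                   headers={"Content-Type": "application/json"})


def invoke(url: str = "http://localhost:8080/") -> str:
    """Send the request; needs `kn func run` listening on localhost:8080."""
    with urlopen(build_invoke_request(url), timeout=5) as response:
        return response.read().decode("utf-8")


if __name__ == "__main__":
    request = build_invoke_request()
    print(request.get_method(), request.full_url, len(request.data))  # POST http://localhost:8080/ 25
```

`invoke()` is kept separate so the request can be inspected without a running function.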

func deploy

func deploy will create an image, push it to the registry, and deploy it to Kubernetes as a Knative Service.

Each time you change the code or rebuild the function (here with --build), Knative creates a new revision of the function and switches the route to it:

$ kn func deploy --build --path hello-world/ --registry docker.io/setevoy

🙌 Function image built: docker.io/setevoy/hello-world:latest

✅ Function updated in namespace “default” and exposed at URL:

http://hello-world.default.10.106.17.160.sslip.io

Check the Pod in the default namespace:

$ kubectl get pod

NAME READY STATUS RESTARTS AGE

hello-world-00003-deployment-74dc7fcdd-7p6ql 2/2 Running 0 9s

Kubernetes Service:

$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

example-broker-kne-trigger-kn-channel ExternalName <none> imc-dispatcher.knative-eventing.svc.cluster.local 80/TCP 33m

hello-world ExternalName <none> kourier-internal.kourier-system.svc.cluster.local 80/TCP 3m50s

hello-world-00001 ClusterIP 10.109.117.53 <none> 80/TCP,443/TCP 4m1s

hello-world-00001-private ClusterIP 10.105.142.206 <none> 80/TCP,443/TCP,9090/TCP,9091/TCP,8022/TCP,8012/TCP 4m1s

hello-world-00002 ClusterIP 10.107.71.130 <none> 80/TCP,443/TCP 2m58s

hello-world-00002-private ClusterIP 10.108.40.73 <none> 80/TCP,443/TCP,9090/TCP,9091/TCP,8022/TCP,8012/TCP 2m58s

hello-world-00003 ClusterIP 10.103.142.53 <none> 80/TCP,443/TCP 2m37s

hello-world-00003-private ClusterIP 10.110.95.58 <none> 80/TCP,443/TCP,9090/TCP,9091/TCP,8022/TCP,8012/TCP 2m37s

Or using the Knative CLI:

$ kn service list

NAME URL LATEST AGE CONDITIONS READY REASON

hello-world http://hello-world.default.10.106.17.160.sslip.io hello-world-00003 4m41s 3 OK / 3 True

Or you can get the ksvc resources with kubectl:

$ kubectl get ksvc

NAME URL LATESTCREATED LATESTREADY READY REASON

hello http://hello.default.10.106.17.160.sslip.io hello-00001 hello-00001 True

hello-world http://hello-world.default.10.106.17.160.sslip.io hello-world-00003 hello-world-00003 True

Call our function:

$ kn func invoke --path hello-world/

Received response

{"message": "Hello World"}

And after about a minute without requests, the Pod is terminated, that is, the Deployment is scaled to zero:

$ kubectl get pod

NAME READY STATUS RESTARTS AGE

hello-world-00003-deployment-74dc7fcdd-7p6ql 2/2 Terminating 0 79s

Knative Serving

Functions are great, but we’re engineers after all and more interested in something closer to Kubernetes, so let’s take a look at Knative Service.

As already mentioned, a Knative Service is a “full workload”, including a Kubernetes Service, a Kubernetes Deployment, a Knative Pod Autoscaler, and the corresponding routes and configurations. See Deploying a Knative Service.

Here we will use a YAML manifest (kn service create would also work) that describes the image the Pod will be created from; save it as knative-hello-go-svc.yaml:

apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: hello
spec:
  template:
    spec:
      containers:
        - image: gcr.io/knative-samples/helloworld-go
          ports:
            - containerPort: 8080
          env:
            - name: TARGET
              value: "World"

Let’s deploy to Kubernetes:

$ kubectl apply -f knative-hello-go-svc.yaml

service.serving.knative.dev/hello created

Check the Knative Service:

$ kn service list

NAME URL LATEST AGE CONDITIONS READY REASON

hello http://hello.default.10.106.17.160.sslip.io hello-00001 70s 3 OK / 3 True

And try with curl:

$ curl http://hello.default.10.106.17.160.sslip.io

Hello World!

Check Pods:

$ kubectl get pod

NAME READY STATUS RESTARTS AGE

hello-00001-deployment-5897f54974-wdhq8 2/2 Running 0 10s

And Kubernetes Deployments:

$ kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

hello-00001-deployment 1/1 1 1 19m

hello-world-00001-deployment 0/0 0 0 26m

hello-world-00002-deployment 0/0 0 0 25m

hello-world-00003-deployment 0/0 0 0 24m

Autoscaling

Knative Serving uses its own Knative Pod Autoscaler (KPA), although you can create a “classic” Horizontal Pod Autoscaler (HPA) instead.

KPA supports scale to zero but cannot autoscale based on CPU. Instead, KPA scales on concurrency or requests-per-second metrics.

HPA, on the other hand, can autoscale based on CPU (and much more) but cannot scale to zero, and for concurrency or RPS it needs additional configuration (see Kubernetes: HorizontalPodAutoscaler — overview and examples).
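The concurrency-based part of KPA’s decision can be approximated with a short calculation. This is a simplified sketch, not Knative’s autoscaler code, and `desired_pods` is a hypothetical name: it assumes Knative’s defaults of a target of 100 concurrent requests per Pod (`container-concurrency-target-default`) and 70% target utilization (`container-concurrency-target-percentage`), averages the samples over the stable window, and ignores panic mode, scale rate limits, and min/max scale bounds. It also suggests why the 10 parallel curl requests further down start only one Pod.

```python
from __future__ import annotations

import math


def desired_pods(concurrency_samples: list[float],
                 target: float = 100.0,
                 utilization_pct: float = 70.0) -> int:
    """Simplified KPA stable-mode decision (no panic mode, rate limits, or min/max scale).

    concurrency_samples: in-flight requests observed across the stable window.
    target: desired concurrent requests per Pod (Knative's default is 100).
    utilization_pct: share of the target KPA aims for (Knative's default is 70%).
    """
    if not concurrency_samples:
        return 0
    observed = sum(concurrency_samples) / len(concurrency_samples)
    if observed == 0:
        return 0  # no traffic during the window: scale to zero
    per_pod = target * utilization_pct / 100  # 100 * 70% = 70 requests per Pod
    return math.ceil(observed / per_pod)


if __name__ == "__main__":
    print(desired_pods([10.0]))            # 1: ten parallel requests fit in one Pod
    print(desired_pods([0.0, 0.0, 0.0]))   # 0: idle, so the Deployment goes to zero
    print(desired_pods([10.0], target=1))  # 15 in this model: a per-Pod target of 1 needs many more Pods
```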

We already have KPAs, created by func deploy and when we created our Knative Service:

$ kubectl get kpa

NAME DESIREDSCALE ACTUALSCALE READY REASON

hello-00001 0 0 False NoTraffic

hello-world-00001 0 0 False NoTraffic

hello-world-00002 0 0 False NoTraffic

hello-world-00003 0 0 False NoTraffic

Run curl in 10 parallel processes:

$ seq 1 10 | xargs -n1 -P 10 curl http://hello.default.10.106.17.160.sslip.io

And in another terminal window, look at kubectl get pod --watch:

$ kubectl get pod -w

NAME READY STATUS RESTARTS AGE

hello-00001-deployment-5897f54974-9lxwl 0/2 ContainerCreating 0 1s

hello-00001-deployment-5897f54974-9lxwl 1/2 Running 0 2s

hello-00001-deployment-5897f54974-9lxwl 2/2 Running 0 2s

“Wow, it scaled!” :-)

Knative Revisions

Thanks to Istio (or Kourier, or Kong), Knative is able to distribute traffic between different versions (revisions) of the system, which allows you to perform blue/green or canary deployments.

See Traffic splitting.

In our Knative Service, we set the environment variable $TARGET to World; let’s change it to Knative:

$ kn service update hello --env TARGET=Knative

Updating Service ‘hello’ in namespace ‘default’:

0.025s The Configuration is still working to reflect the latest desired specification.

2.397s Traffic is not yet migrated to the latest revision.

2.441s Ingress has not yet been reconciled.

2.456s Waiting for load balancer to be ready

2.631s Ready to serve.

Service ‘hello’ updated to latest revision ‘hello-00002’ is available at URL:

http://hello.default.10.106.17.160.sslip.io

Check revisions:

$ kn revision list

NAME SERVICE TRAFFIC TAGS GENERATION AGE CONDITIONS READY REASON

hello-00002 hello 100% 2 20s 4 OK / 4 True

hello-00001 hello 1 24m 3 OK / 4 True

And try to call the endpoint:

$ curl http://hello.default.10.106.17.160.sslip.io

Hello Knative!

Now let’s split the traffic: send 50 percent to the previous revision and 50 percent to the new one:

$ kn service update hello --traffic hello-00001=50 --traffic @latest=50

Check revisions again:

$ kn revision list

NAME SERVICE TRAFFIC TAGS GENERATION AGE CONDITIONS READY REASON

hello-00002 hello 50% 2 115s 4 OK / 4 True

hello-00001 hello 50% 1 26m 4 OK / 4 True

Let’s run curl again, several times:

$ curl http://hello.default.10.106.17.160.sslip.io

Hello Knative!

$ curl http://hello.default.10.106.17.160.sslip.io

Hello World!

$ curl http://hello.default.10.106.17.160.sslip.io

Hello Knative!

$ curl http://hello.default.10.106.17.160.sslip.io

Hello World!

Nice!
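Conceptually, a split like this assigns each request to a revision according to the percentages, which Knative requires to add up to 100. The Python sketch below illustrates the idea with hypothetical `validate` and `pick_revision` helpers; the real selection happens in the networking layer (Kourier here), and this is not its actual algorithm.

```python
from __future__ import annotations

import random
from collections import Counter


def validate(traffic: dict[str, int]) -> None:
    """Knative rejects traffic blocks whose percentages do not add up to 100."""
    total = sum(traffic.values())
    if total != 100:
        raise ValueError(f"traffic percentages sum to {total}, expected 100")


def pick_revision(traffic: dict[str, int], roll: float) -> str:
    """Map a roll in [0, 100) onto a revision by cumulative percentage."""
    validate(traffic)
    cumulative = 0
    for revision, percent in traffic.items():
        cumulative += percent
        if roll < cumulative:
            return revision
    raise ValueError("roll must be in [0, 100)")


if __name__ == "__main__":
    split = {"hello-00001": 50, "hello-00002": 50}
    rng = random.Random(42)  # fixed seed for a reproducible run
    hits = Counter(pick_revision(split, rng.random() * 100) for _ in range(10_000))
    print(hits)  # roughly 5,000 requests per revision
```

Because each request is assigned independently, a handful of curl calls can easily show the same revision twice in a row; the 50/50 ratio only emerges over many requests.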

Knative Eventing

Knative Eventing is a set of services and resources that lets us build event-driven applications, where functions are invoked by events. To do this, we connect sources that generate events to sinks, i.e. “consumers” that react to those events.

The rest of the Quickstart documentation has an example of working with a Broker and Trigger using cloudevents-player, but in my opinion it doesn’t really show what Knative Eventing can do, so let’s take the slightly “harder way”.

As mentioned above, Knative supports three ways to implement an event-driven system: Source to Sink, Channel and Subscription, and Broker and Trigger.

Source to Sink

Source to Sink is the most basic model, built from Source and Sink resources, where a Source is an Event Producer and a Sink is a resource that can be invoked or sent events.

Examples of Sources include APIServerSource (Kubernetes API server), GitHub and GitLab, RabbitMQ, and others, see Event sources.

A Sink can be a Knative Service, a Channel, or a Broker (i.e. a “sink” where we “drain” our events). In the Source to Sink model, though, the sink should be a Knative Service; we will talk about Channels and Brokers later.

Channel and Subscription

Channel and Subscription is an event pipe (like a pipe in bash, where | redirects the stdout of one program to the stdin of another).

Here, a Channel is an interface between an event source and the channel’s subscribers. At the same time, the Channel is a type of sink, as it can store events and then deliver them to its subscribers.

Knative supports three types of channels:

In-memory Channel

Apache Kafka Channel

Google Cloud Platform Pub-sub Channel

In-memory Channel is the default type; it cannot replay events or store them persistently, so use types like Apache Kafka Channel if you need that.

Next, a Subscription connects a Channel to the corresponding Knative Service: Services subscribe to a Channel through a Subscription and start receiving its messages.
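The Channel and Subscription model can be illustrated with a toy in-process fan-out in Python. The class and method names are hypothetical and unrelated to Knative’s API: every event published to the channel is delivered to every current subscriber, just as each Subscription receives what is sent to its Channel, and nothing is stored for later.

```python
from __future__ import annotations

from typing import Callable

Event = dict
Subscriber = Callable[[Event], None]


class ToyChannel:
    """In-memory fan-out: every published event goes to every subscriber.

    Nothing is persisted (the default In-memory Channel doesn't persist events
    either): an event published before a subscriber joins never reaches it.
    """

    def __init__(self) -> None:
        self._subscribers: list[Subscriber] = []

    def subscribe(self, subscriber: Subscriber) -> None:
        """Plays the role of a Subscription: attach a consumer to this channel."""
        self._subscribers.append(subscriber)

    def publish(self, event: Event) -> int:
        """Deliver the event to all current subscribers; return how many received it."""
        for subscriber in self._subscribers:
            subscriber(event)
        return len(self._subscribers)


if __name__ == "__main__":
    channel = ToyChannel()
    inbox_a: list[Event] = []
    inbox_b: list[Event] = []
    channel.subscribe(inbox_a.append)
    channel.subscribe(inbox_b.append)
    delivered = channel.publish({"type": "dev.knative.sources.ping",
                                 "data": {"message": "Hello from KBE!"}})
    print(delivered, len(inbox_a), len(inbox_b))  # 2 1 1
```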

Broker and Trigger

Broker and Trigger represent an event mesh model, which delivers events to the services that need them.

Here, a Broker collects events from various sources, providing an ingress gateway for them, and a Trigger is essentially a Subscription with the ability to filter which events it receives.
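A Trigger’s filter selects events by their CloudEvents attributes: each attribute listed under the Trigger’s `filter.attributes` must match exactly, and a Trigger without a filter receives every event. The Python sketch below mimics that matching in-process; the function names, trigger names, and the `com.example.order.created` event are hypothetical, while the ping event attributes are taken from the PingSource logs later in this post.

```python
from __future__ import annotations


def trigger_matches(filter_attributes: dict[str, str], event: dict[str, str]) -> bool:
    """Exact match on every attribute in the filter; an empty filter matches all events."""
    return all(event.get(name) == value for name, value in filter_attributes.items())


def dispatch(events: list[dict[str, str]],
             triggers: dict[str, dict[str, str]]) -> dict[str, list[dict[str, str]]]:
    """Return the events each trigger would receive from the broker."""
    return {name: [e for e in events if trigger_matches(attrs, e)]
            for name, attrs in triggers.items()}


if __name__ == "__main__":
    ping = {"type": "dev.knative.sources.ping",
            "source": "/apis/v1/namespaces/default/pingsources/knative-hello-ping-source"}
    order = {"type": "com.example.order.created", "source": "/shop"}  # hypothetical event
    triggers = {
        "ping-only": {"type": "dev.knative.sources.ping"},  # hypothetical trigger names
        "everything": {},
    }
    for name, received in dispatch([ping, order], triggers).items():
        print(name, [e["type"] for e in received])
```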

An example of creating a Source to Sink

Let’s create a Knative Service, which will be our sink, that is, the recipient:

$ kn service create knative-hello --concurrency-target=1 --image=quay.io/redhattraining/kbe-knative-hello:0.0.1

…

Service ‘knative-hello’ created to latest revision ‘knative-hello-00001’ is available at URL:

http://knative-hello.default.10.106.17.160.sslip.io

concurrency-target here sets the autoscaling target to one concurrent request per Pod: when there are more simultaneous requests, the corresponding KPA creates additional pods. This is a soft target; a hard per-Pod limit is set with --concurrency-limit instead.

Next, create an Event Source, for example a PingSource, which will send a JSON message to our knative-hello Service every minute:

$ kn source ping create knative-hello-ping-source --schedule "* * * * *" --data '{"message": "Hello from KBE!"}' --sink ksvc:knative-hello

Ping source ‘knative-hello-ping-source’ created in namespace ‘default’.

Check it:

$ kn source list

NAME TYPE RESOURCE SINK READY

knative-hello-ping-source PingSource pingsources.sources.knative.dev ksvc:knative-hello True

And let’s look at the knative-hello Service logs:

$ kubectl logs -f knative-hello-00001-deployment-864756c67d-sk76m

…

2023-04-06 10:23:00,329 INFO [eventing-hello] (executor-thread-1) ce-id=bd1093a9-9ab7-4b79-8aef-8f238c29c764

2023-04-06 10:23:00,331 INFO [eventing-hello] (executor-thread-1) ce-source=/apis/v1/namespaces/default/pingsources/knative-hello-ping-source

2023-04-06 10:23:00,331 INFO [eventing-hello] (executor-thread-1) ce-specversion=1.0

2023-04-06 10:23:00,331 INFO [eventing-hello] (executor-thread-1) ce-time=2023-04-06T10:23:00.320053265Z

2023-04-06 10:23:00,332 INFO [eventing-hello] (executor-thread-1) ce-type=dev.knative.sources.ping

2023-04-06 10:23:00,332 INFO [eventing-hello] (executor-thread-1) content-type=null

2023-04-06 10:23:00,332 INFO [eventing-hello] (executor-thread-1) content-length=30

2023-04-06 10:23:00,333 INFO [eventing-hello] (executor-thread-1) POST:{"message": "Hello from KBE!"}

For now, that’s all I wanted to learn about Knative.

It looks quite interesting in general, but there may be issues with autoscaling and with Istio, since Istio itself can be a bit painful. That said, we already run Knative in production on my current project and haven’t seen any real problems with it yet.

Links on the topic

Overview of self-hosted serverless frameworks for Kubernetes: OpenFaaS, Knative, OpenWhisk, Fission

Deploy AWS lambda function to Kubernetes using Knative and Triggermesh KLR

Originally published at RTFM: Linux, DevOps, and system administration.